A Comprehensive Guide to Kubernetes Architecture and Cluster Building Methods

Kubernetes has become the de facto standard for container orchestration, helping organizations deploy, scale, and manage applications efficiently. Understanding its architecture and the ways a cluster can be built is essential for using it well.

This detailed guide dives deep into Kubernetes architecture, explains its components with real-world examples, and outlines various cluster deployment methods to suit different needs. Whether you are a beginner or an advanced Kubernetes user, this article is tailored to enhance your knowledge.

What is Kubernetes?

Kubernetes, also known as K8s, is an open-source platform designed to automate the deployment, scaling, and operation of application containers. Its ability to manage complex applications across clusters of machines makes it indispensable for modern DevOps workflows.

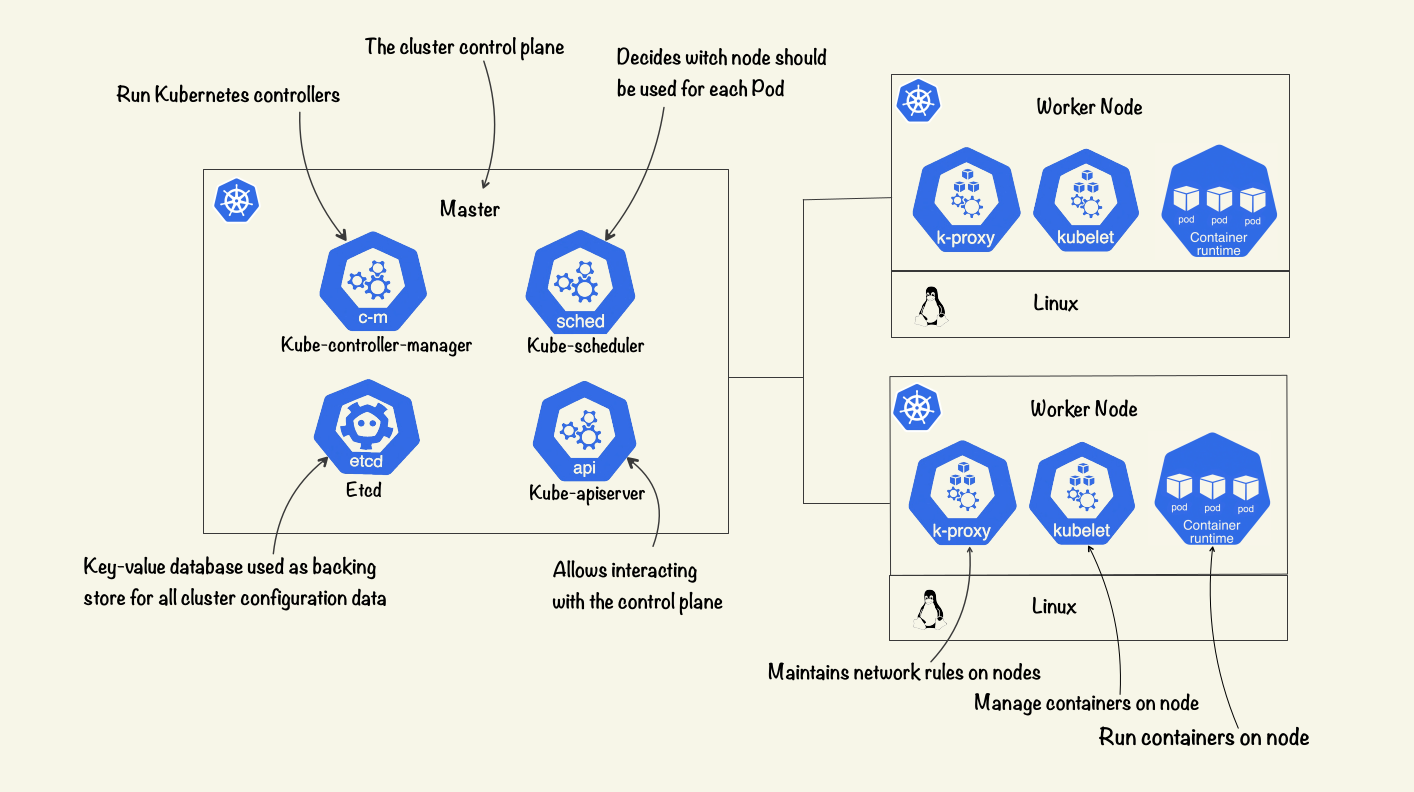

At its core, Kubernetes follows a master-worker architecture: master nodes (called the control plane in current Kubernetes documentation) make decisions about the cluster, and worker nodes run the application workloads.

Kubernetes Architecture Explained

Kubernetes architecture comprises two primary entities: Master Node and Worker Nodes. Let's explore each in detail.

1. Master Node: The Brain of Kubernetes

The master node manages the overall state of the Kubernetes cluster. It ensures that the desired state of the applications and resources is maintained, even in dynamic environments. Key components include:

a. Kube-APIServer

The kube-apiserver is the control plane component that acts as the communication hub for the Kubernetes cluster.

Responsibilities:

Handling API requests (e.g., creating pods, services, or deployments).

Authenticating, authorizing, and validating requests, then persisting the resulting state to etcd.

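Example: Suppose a developer wants to deploy a new application. They submit a YAML file describing the deployment to the kube-apiserver, typically with kubectl apply. The kube-apiserver authenticates and validates the request and stores the desired state in etcd. The controllers and the scheduler then watch the API server and do the work of creating the pods and assigning them to worker nodes.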
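The request flow described above can be sketched as a toy model. This is only an illustration under simplifying assumptions: the ToyApiServer class, its permission table, and the in-memory store are hypothetical stand-ins, not the real kube-apiserver code or API.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    user: str      # identity already established by authentication
    verb: str      # e.g. "create"
    resource: str  # e.g. "deployments"
    obj: dict      # the submitted manifest, parsed from YAML


@dataclass
class ToyApiServer:
    # Hypothetical permission table: user -> set of (verb, resource) pairs.
    permissions: dict
    # Plain dict standing in for etcd.
    store: dict = field(default_factory=dict)

    def handle(self, req: Request) -> str:
        # 1. Authorization: is this user allowed to perform the verb?
        if (req.verb, req.resource) not in self.permissions.get(req.user, set()):
            return "403 Forbidden"
        # 2. Validation: reject objects that lack a name or a spec.
        name = req.obj.get("metadata", {}).get("name")
        if not name or "spec" not in req.obj:
            return "422 Unprocessable Entity"
        # 3. Persistence: record the desired state only. Creating pods is
        #    left to controllers and the scheduler, which watch the store.
        key = f"/{req.resource}/{name}"
        if key in self.store:
            return "409 Conflict"
        self.store[key] = req.obj
        return "201 Created"


server = ToyApiServer(permissions={"dev": {("create", "deployments")}})
manifest = {"kind": "Deployment", "metadata": {"name": "web"}, "spec": {"replicas": 3}}
print(server.handle(Request("dev", "create", "deployments", manifest)))    # 201 Created
print(server.handle(Request("guest", "create", "deployments", manifest)))  # 403 Forbidden
```

Note that the sketch never starts a pod: the API server's job ends once the desired state is stored.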

b. etcd: The Cluster's Memory

etcd is a distributed key-value store used to store all cluster state data.

Responsibilities:

Keeping track of configuration details, such as pod specifications and network configurations.

Providing high availability and consistency.

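Example: If a worker node crashes, the desired state of its workloads is still recorded in etcd, so the control plane can recreate the lost pods on healthy nodes. Because all cluster state lives in etcd, backing it up regularly is essential for disaster recovery.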
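To make the idea of a key-value store for cluster state more concrete, here is a minimal in-memory sketch. ToyKV is a hypothetical class, not the etcd client API. The key layout only imitates the /registry/... prefix convention, and a real etcd cluster also replicates data across members using the Raft consensus algorithm, which this sketch ignores.

```python
class ToyKV:
    """In-memory stand-in for etcd: every write bumps a global revision."""

    def __init__(self):
        self.revision = 0
        self.data = {}  # key -> (value, revision of the last write to that key)

    def put(self, key, value):
        self.revision += 1
        self.data[key] = (value, self.revision)
        return self.revision

    def get_prefix(self, prefix):
        # Return every key/value pair stored under the prefix, sorted by key.
        return {k: v for k, (v, _) in sorted(self.data.items()) if k.startswith(prefix)}


kv = ToyKV()
kv.put("/registry/pods/default/web-1", {"node": "node-a"})
kv.put("/registry/pods/default/web-2", {"node": "node-b"})
kv.put("/registry/services/default/web", {"port": 80})

print(kv.get_prefix("/registry/pods/"))  # both pod entries, but not the service
print(kv.revision)                       # 3
```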

c. Kube-Scheduler: The Planner

The kube-scheduler watches for newly created pods that have no node assigned and selects a node for each one based on resource availability and policies.

Responsibilities:

Scheduling pods to nodes with sufficient CPU, memory, and other resources.

Optimizing pod placement for better performance.

Example: A web application pod requiring high CPU is scheduled on a node with enough processing power, ensuring smooth operation.
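Scheduling can be thought of as two phases: filter out the nodes that cannot fit the pod, then score the remaining ones. The sketch below illustrates that idea only. The Node class, the schedule function, and the "most resources left over" scoring rule are assumptions chosen for brevity. The real kube-scheduler uses a configurable set of plugins.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # unallocated CPU in millicores
    free_mem_mi: int  # unallocated memory in MiB


def schedule(pod_cpu_m, pod_mem_mi, nodes):
    # Filter phase: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod_cpu_m and n.free_mem_mi >= pod_mem_mi
    ]
    if not feasible:
        return None  # no node fits, so the pod would stay Pending

    # Score phase (toy rule): prefer the node with the most CPU left over
    # after placement, using leftover memory as a tie-breaker.
    def score(n):
        return (n.free_cpu_m - pod_cpu_m, n.free_mem_mi - pod_mem_mi)

    return max(feasible, key=score).name


nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=2048),
    Node("node-b", free_cpu_m=4000, free_mem_mi=8192),
    Node("node-c", free_cpu_m=2000, free_mem_mi=16384),
]
print(schedule(1500, 1024, nodes))  # node-b
print(schedule(8000, 1024, nodes))  # None
```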

d. Kube-Controller-Manager: The Overseer

This component runs various controllers to monitor the state of the cluster.

Controllers include:

Node Controller: Checks node health.

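Replication Controller: Ensures the desired number of pod replicas are running. In current clusters this job is usually done by the ReplicaSet controller, which Deployments use under the hood.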

Service Account Controller: Manages service accounts and tokens.

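Example: If a node becomes unresponsive, the node controller marks it as NotReady and, after a timeout, its pods are evicted. The workload controllers then create replacement pods, which the scheduler places on healthy nodes.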
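Controllers all follow the same pattern: compare the desired state with the observed state and act on the difference. Below is a rough sketch of one pass of such a loop. The reconcile function and its action strings are hypothetical, and it merges the work of the node controller and the replica controller into a single step for brevity.

```python
def reconcile(desired_replicas, pods, ready_nodes):
    """Return the actions one control-loop pass would take.

    pods maps pod name -> node name; ready_nodes is the set of healthy nodes.
    """
    # Pods on nodes that are not ready are treated as lost.
    healthy = {p: n for p, n in pods.items() if n in ready_nodes}
    lost = sorted(set(pods) - set(healthy))
    actions = [f"delete {p}" for p in lost]

    diff = desired_replicas - len(healthy)
    if diff > 0:
        # Too few replicas: request new pods (the scheduler places them later).
        actions += ["create pod"] * diff
    elif diff < 0:
        # Too many replicas: remove the surplus.
        actions += [f"delete {p}" for p in sorted(healthy)[:-diff]]
    return actions


pods = {"web-1": "node-a", "web-2": "node-b", "web-3": "node-b"}
# node-b has become unresponsive, so only node-a is ready.
print(reconcile(3, pods, ready_nodes={"node-a"}))
# ['delete web-2', 'delete web-3', 'create pod', 'create pod']
```

Running the same function again once the state matches returns an empty list, which is what makes the loop safe to repeat indefinitely.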

2. Worker Nodes: The Workhorses of Kubernetes

Worker nodes run the actual application workloads. They host the containers and provide the runtime environment. Key components include:

a. Kubelet: The Pod Manager

The kubelet is an agent on each node that ensures containers are running as described in the pod specifications it receives from the control plane.

Responsibilities:

Communicating with the kube-apiserver for pod assignments.

Reporting the health of running pods.

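Example: If a container in a pod crashes, the kubelet restarts it according to the pod's restartPolicy. If the whole pod is lost, for example because its node fails, a controller such as a ReplicaSet creates a replacement.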
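The restart decision can be sketched as follows. The three restartPolicy values (Always, OnFailure, Never) are the ones Kubernetes defines, and the delay mirrors the documented exponential back-off (10s, 20s, 40s, and so on, capped at five minutes). The function names themselves are hypothetical and this is not the kubelet's actual code.

```python
def should_restart(restart_policy, exit_code):
    # Decide whether an exited container should be started again.
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {restart_policy}")


def backoff_seconds(restart_count, base=10, cap=300):
    # The delay doubles with each restart and is capped at five minutes.
    return min(base * 2 ** restart_count, cap)


print(should_restart("OnFailure", exit_code=1))   # True
print(should_restart("OnFailure", exit_code=0))   # False
print([backoff_seconds(i) for i in range(6)])     # [10, 20, 40, 80, 160, 300]
```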

b. Kube-Proxy: The Traffic Manager

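Kube-proxy runs on each node and implements Kubernetes Services, routing traffic addressed to a service to one of the pods behind it.

Responsibilities:

Configuring iptables (or IPVS) rules for service routing.

Enabling pod-to-service communication and load balancing across a service's pods. Direct pod-to-pod networking is provided by the cluster's network plugin (CNI), not by kube-proxy.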

Example: When a user accesses a web application via a service, kube-proxy ensures the request reaches the appropriate pod, even if the pod is on a different node.
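Conceptually, service routing is a lookup from a stable service address to one of several pod addresses. The sketch below imitates the random backend choice used in iptables mode. ToyServiceProxy and the IP addresses are made up for illustration. The real kube-proxy programs kernel rules rather than handling each request itself.

```python
import random


class ToyServiceProxy:
    def __init__(self, seed=None):
        self.endpoints = {}  # service address -> list of pod addresses
        self.rng = random.Random(seed)

    def set_endpoints(self, service_addr, backends):
        # Called whenever the pods behind a service change.
        self.endpoints[service_addr] = list(backends)

    def route(self, service_addr):
        backends = self.endpoints.get(service_addr)
        if not backends:
            return None  # unknown service, or no ready pods behind it
        # Pick one backend at random; it may live on a different node.
        return self.rng.choice(backends)


proxy = ToyServiceProxy()
proxy.set_endpoints("10.96.0.10:80", ["10.244.1.5:8080", "10.244.2.7:8080"])
print(proxy.route("10.96.0.10:80"))  # one of the two pod addresses
```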

c. Container Runtime: The Execution Layer

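The container runtime is responsible for running containers on each node. Popular runtimes include containerd and CRI-O. Docker Engine can still be used, but since Kubernetes 1.24 removed the built-in dockershim it requires the cri-dockerd adapter.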

Responsibilities:

Pulling container images from registries.

Managing container lifecycles.

Example: A Node.js application container is started by the runtime (for example, containerd), and the kubelet monitors its state.

Building a Kubernetes Cluster: Step-by-Step Methods

Now that we’ve explored Kubernetes architecture, let’s look at different methods to build a Kubernetes cluster based on your needs and resources.

1. Self-Hosted Kubernetes Cluster

This approach involves setting up Kubernetes on your infrastructure, giving you complete control over the environment.

Tools: kubeadm, kops, Rancher, kubespray.

Example:

Use kubeadm init to initialize the control plane on a master node.

Add worker nodes using kubeadm join.

Best For: Enterprises with specific compliance or security requirements.

2. Cloud-Hosted Kubernetes Cluster

Cloud providers like Amazon EKS, Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS) offer managed Kubernetes services.

Example:

Deploy a cluster using GKE with a few clicks or a CLI command like:

gcloud container clusters create my-cluster --num-nodes=3

The cloud provider manages the control plane while you manage the worker nodes.

Best For: Organizations already leveraging cloud services and looking to reduce operational overhead.

3. Cluster as a Service (CaaS)

CaaS providers like DigitalOcean and Linode offer managed clusters with a simplified setup, so you can get started without deep expertise in one of the large cloud platforms.

Example:

Create a cluster on DigitalOcean using their UI or API, and manage workloads without worrying about underlying infrastructure.

Best For: Startups or small teams looking for ease of use.

4. Containerized Kubernetes

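Tools like Minikube and kind (Kubernetes in Docker) run a complete cluster on a single machine, which is ideal for development and testing. kind runs each cluster node as a Docker container, while Minikube can run the cluster in either a container or a virtual machine.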

Example:

Spin up a local cluster with Minikube:

minikube start

Use this setup to test application configurations before deploying to production.

Best For: Developers and QA teams.

Conclusion

Kubernetes provides a robust platform for modern application deployment, backed by its scalable architecture and versatile cluster management options. By understanding its components, such as the API server, etcd, and kubelet, alongside various cluster deployment strategies, you can choose the best setup to meet your needs.

Whether you’re a small team exploring Minikube or a large organization deploying on Amazon EKS, Kubernetes empowers you to build resilient and scalable applications with ease. Start your Kubernetes journey today, and unlock the full potential of container orchestration!
